Call for papers in PDF format

Proceedings published in the Springer LNCS Series, Volume 13491

Edificio del Rectorado, Av. de Cervantes, 2, 29016 Málaga, Spain

ANTS 2022 (Attn: Dr. Manuel López-Ibáñez), Málaga, Spain
email: manuel.lopez-ibanez@manchester.ac.uk

ANTS 2022 (Attn: Dr. Jose García Nieto), Málaga, Spain
email: jnieto@uma.es

The conference venue is located close to the centre of Málaga and the touristic harbor, which offer a very large selection of restaurants, tapas bars, and local street food. You will surely find something to satisfy your appetite! Beware that not all restaurants are open for lunch, but the choice is still very large.

The social dinner will take place at "La Reserva del Olivo".

| Time (Day 1) | Event |
|---|---|
| 8:30 - 16:00 | Registration |
| 8:30 - 9:00 | Welcome |
| 9:00 - 10:00 | Invited plenary talk: "The 5 most popular artificial neural networks", Jürgen Schmidhuber, Director, AI Initiative, KAUST; Scientific Director, Swiss AI Lab IDSIA; Adj. Prof. of Artificial Intelligence, USI; Co-Founder & Chief Scientist, NNAISENSE (Chair: Marco Dorigo) |
| 10:00 - 10:40 | Coffee break |
| 10:40 - 12:00 | Session 1: Oral presentations (Chair: Michael Allwright) |
| 12:00 - 14:00 | Lunch (on your own) |
| 14:00 - 15:20 | Session 2: Oral presentations (Chair: Eliseo Ferrante) |
| 15:20 - 15:45 | Session 3: Preview highlights (Chair: Francisco Luna) |
| 15:45 - 18:30 | Poster session 1 + drinks and appetisers: papers and previews presented in Sessions 1, 2, and 3 |

| Time (Day 2) | Event |
|---|---|
| 9:00 - 10:00 | Invited plenary talk: "Swarm Robotics Across Scales: Engineering Complex Behaviors", Giovanni Beltrame, Polytechnique Montreal (Chair: Giovanni Reina) |
| 10:00 - 10:40 | Coffee break |
| 10:40 - 11:40 | Session 4: Oral presentations (Chair: Sanaz Mostaghim) |
| 11:40 - 14:00 | Lunch (on your own) |
| 14:00 - 15:40 | Session 5: Oral presentations (Chair: Giovanni Beltrame) |
| 15:20 - 15:45 | Session 6: Preview highlights (Chair: José Manuel García Nieto) |
| 15:45 - 18:30 | Poster session 2 + drinks and appetisers: papers and previews presented in Sessions 4, 5, and 6 |
| 20:00 - 22:00 | Social dinner at "La Reserva del Olivo", Pl. del Carbón, 2, 29015 Málaga, Spain |

| Time (Day 3) | Event |
|---|---|
| 9:00 - 10:00 | Invited plenary talk: "Mexican Waves: The Adaptive Value of Collective Behaviour", Jens Krause, Humboldt University and Leibniz-Institute of Freshwater Ecology and Inland Fisheries (Chair: Heiko Hamann) |
| 10:00 - 10:40 | Coffee break |
| 10:40 - 11:40 | Session 7: Oral presentations (Chair: Volker Strobel) |
| 11:40 - 13:40 | Lunch (on your own) |
| 13:40 - 14:00 | Session 8: Preview highlights (Chair: Manuel López-Ibáñez) |
| 14:00 - 16:30 | Poster session 3 + drinks and appetisers: papers and previews presented in Sessions 7 and 8 |
| 16:30 - 17:00 | Award ceremony and conclusion (Chair: Heiko Hamann) |

The ANTS 2022 registration fee is 450 EUR.

The conference fee includes:

Coffee breaks and a conference dinner will be offered by the organizing committee.

Please go to the registration page and follow the instructions therein.

Abstract: Modern Artificial Intelligence is dominated by artificial neural networks (NNs) and deep learning. I am proud that foundations of the most popular NNs originated in my labs. Here I discuss: (1) Long Short-Term Memory (LSTM), the most cited NN of the 20th century, (2) ResNet, the most cited NN of the 21st century (an open-gated version of our Highway Net: the first working really deep feedforward NN), (3) AlexNet and VGG Net, the 2nd and 3rd most frequently cited NNs of the 21st century (both building on our similar earlier DanNet: the first deep convolutional NN to win image recognition competitions), (4) Generative Adversarial Networks (an instance of my earlier Adversarial Artificial Curiosity), and (5) variants of Transformers (Transformers with linearized self-attention are formally equivalent to my earlier Fast Weight Programmers). Most of this started with our Annus Mirabilis of 1990-1991 when compute was a million times more expensive than today. In the 2010s, all of this work was feverishly built on by an outstanding community of machine learning researchers, engineers, and practitioners to create amazing things that have impacted the lives of billions of people worldwide.

Bio: Since age 15 or so, the main goal of Professor Jürgen Schmidhuber has been to build a self-improving Artificial Intelligence (AI) smarter than himself, then retire. He is often called the "father of modern AI" by the media. His lab's Deep Learning Neural Networks (NNs) based on ideas published in the "Annus Mirabilis" 1990-1991 have revolutionised machine learning and AI. In 2009, the CTC-trained Long Short-Term Memory (LSTM) of his team was the first recurrent NN to win international pattern recognition competitions. In 2010, his lab's fast and deep feedforward NNs on GPUs greatly outperformed previous methods, without using any unsupervised pre-training, a popular deep learning strategy that he pioneered in 1991. In 2011, the DanNet of his team was the first feedforward NN to win computer vision contests, achieving superhuman performance. In 2012, they had the first deep NN to win a medical imaging contest (on cancer detection). This deep learning revolution quickly spread from Europe to North America and Asia, and attracted enormous interest from industry. By the mid-2010s, his lab's NNs were on 3 billion devices and were used billions of times per day by users of the world's most valuable public companies, e.g., for greatly improved speech recognition on all Android smartphones, greatly improved machine translation through Google Translate and Facebook (over 4 billion LSTM-based translations per day), Apple's Siri and QuickType on all iPhones, the answers of Amazon's Alexa, and numerous other applications. In May 2015, his team published the Highway Net, the first working really deep feedforward NN with hundreds of layers; its open-gated version, called ResNet (Dec 2015), has become the most cited NN of the 21st century, and LSTM the most cited NN of the 20th (Bloomberg called LSTM arguably the most commercial AI achievement). His lab's NNs are now heavily used in healthcare and medicine, helping to make human lives longer and healthier.
His research group also established the fields of mathematically rigorous universal AI and recursive self-improvement in metalearning machines that learn to learn (since 1987). In 1990, he introduced unsupervised generative adversarial neural networks that fight each other in a minimax game to implement artificial curiosity (the famous GANs are instances thereof). In 1991, he introduced neural fast weight programmers formally equivalent to what's now called Transformers with linearized self-attention (Transformers are popular in natural language processing and many other fields). His formal theory of creativity & curiosity & fun explains art, science, music, and humor. He also generalized algorithmic information theory and the many-worlds theory of physics, and introduced the concept of Low-Complexity Art, the information age's extreme form of minimal art. He is the recipient of numerous awards, author of about 400 peer-reviewed papers, and co-founder and Chief Scientist of the company NNAISENSE, which aims at building the first practical general-purpose AI. He is a frequent keynote speaker and advises various governments on AI strategies.

Abstract: Swarm robotics relies on many simple robots, governed by local interactions, to implement complex behaviours, generally inspired by biological systems. What are the ingredients necessary for the design of artificial swarms for practical applications? This talk will present some of the challenges of practical swarms, identifying in particular the limitations of a leaderless hierarchy, where robots have only a partial view of their mission, as well as ways to circumvent these limitations by exploiting heterogeneous swarms. The talk will draw on several practical examples, including the control of smart materials, search and rescue missions, and space exploration.

Bio: Giovanni Beltrame obtained his Ph.D. in Computer Engineering from Politecnico di Milano in 2006, after which he worked as a microelectronics engineer at the European Space Agency on a number of projects ranging from radiation-tolerant systems to computer-aided design. In 2010 he moved to Montreal, Canada, where he is currently a Professor in the Computer and Software Engineering Department at Polytechnique Montreal. Dr. Beltrame directs the MIST Lab, with more than 20 students and postdocs under his supervision. He has completed several projects in collaboration with industry and government agencies in the areas of robotics, disaster response, and space exploration. His research interests include modeling and design of embedded systems, artificial intelligence, and robotics, on which he has published research in top journals and conferences.

Abstract: The collective behaviour of animals has attracted considerable attention in recent years, with many studies exploring how local interactions between individuals can give rise to global group properties. The functional aspects of collective behaviour are less well studied, especially in the field, and relatively few studies have investigated the adaptive benefits of collective behaviour in situations where prey are attacked by predators. This paucity of studies is unsurprising because predator-prey interactions in the field are difficult to observe. Furthermore, recent studies of predator-prey interactions have focused on the collective behaviour of the prey rather than on the behaviour of the predator. Here I present a field study that investigated the antipredator benefits of waves produced by fish at the water surface when diving down collectively in response to attacks by avian predators. Fish engaged in surface waves that were highly conspicuous, repetitive, and rhythmic, involving many thousands of individuals for up to 2 min. Collective fish waves increased the time birds waited until their next attack and also reduced capture probability in three avian predators that differed greatly in size, appearance, and hunting strategy. Taken together, these results support a generic antipredator function of fish waves, which could result from a confusion effect or from waves acting as a perception advertisement; this requires further exploration.

Bio: Jens Krause is a behavioural ecologist with a strong interest in collective behaviour and social networks. He has published a number of books (Living in Groups, OUP; Fish Cognition and Behavior, Wiley/Blackwells; Exploring Animal Social Networks, PUP; Animal Social Networks, OUP) and articles on the mechanisms and functions of living in groups. He is currently professor of fish biology and ecology in the Faculty of Life Science at the Humboldt University Berlin, Head of Department at the Leibniz-Institute of Freshwater Ecology and Inland Fisheries, and a member of the executive board of the excellence cluster “Science of Intelligence”. He started his university education at the Free University Berlin and obtained his PhD from St. John’s College Cambridge, UK, followed by postdocs at Mount Allison University, Canada, and Princeton University, USA. He then held a professorship in behavioural ecology at Leeds University, UK, for several years before moving back to Berlin in 2009. In 2014 he was elected to the Berlin-Brandenburgische Akademie der Wissenschaften.

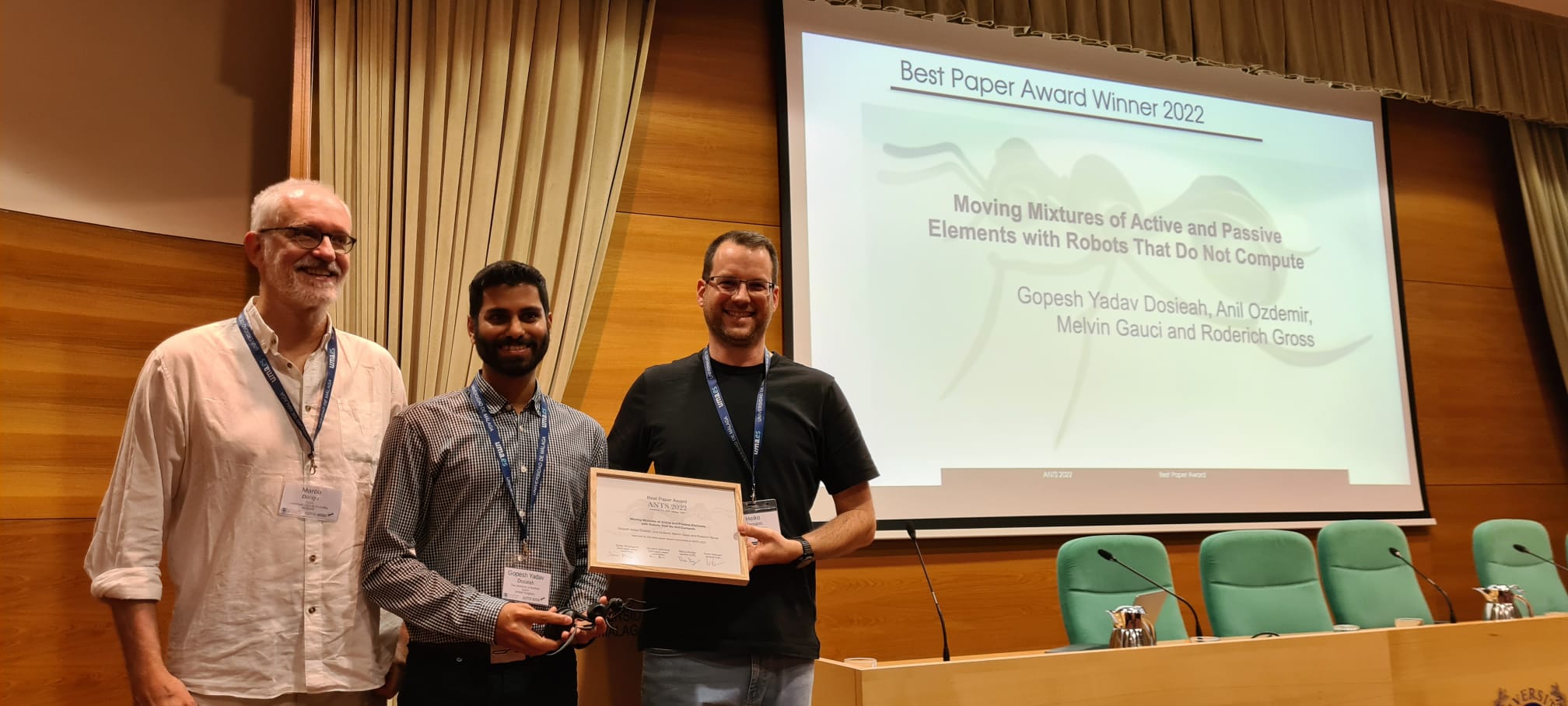

Continuing with a tradition started at ANTS 2002, the "Best Paper Award" at ANTS 2022 consists of a sculpture of an ant specially made for the ANTS conference series by the Italian sculptor Matteo Pugliese.

The best paper award has been graciously sponsored by the Technology Innovation Institute and Springer LNCS.

| Best Paper Award nominees | Authors |
|---|---|
| Dynamic Spatial Guided Multi-Guide Particle Swarm Optimization Algorithm for Many-Objective Optimization | Weka Steyn and Andries Engelbrecht |
| Moving Mixtures of Passive and Active Elements with Robots That Do Not Compute | Gopesh Yadav Dosieah, Anil Ozdemir, Melvin Gauci and Roderich Gross |
| Automatic Design of Multi-Objective Particle Swarm Optimizers with jMetal and irace | Daniel Doblas, Antonio J. Nebro, Manuel López-Ibáñez, José García-Nieto and Carlos A. Coello Coello |

Each oral presentation will last 20 minutes sharp (15 minutes of presentation plus 5 minutes for questions and answers). Papers presented orally will also be presented during the poster session on the same day as the oral presentation. Please refer to the online program for details.

Posters should be A0 portrait. There is no standard template for the poster; each author can choose what best fits their work. Material to fix the poster on the stand will be available.

Papers that are not presented orally will be introduced by the author in a 2-minute highlight, using a SINGLE slide. This slide must be sent to us in advance. We will preload this slide onto the computer and project it for you during your presentation.

Paper submissions are now closed

Final submission deadline: April 30, 2022

Submissions may be a maximum of 11 pages, excluding references, when typeset in the LNCS Springer LaTeX template. Submissions should be a minimum of 7 full pages.

This strict page limit includes figures, tables, and all supplementary sections (e.g., Acknowledgements). The only exclusion from the page limit is the reference list, which should be of any length that properly positions the paper with respect to the state of the art.

Papers should be prepared in English, in the LNCS Springer LaTeX style, using the default font and font size. Authors should consult Springer’s authors’ guidelines and use their proceedings template for LaTeX, for the preparation of their papers. Please download the LNCS Springer LaTeX template package (zip, 309 kB) and authors' guidelines (pdf, 191 kB) directly from the Springer website. Please also download and consult the ANTS 2022 sample LaTeX document (zip, 129 kB), which shows the correct options to use within the Springer template.

Submissions that do not respect these guidelines will not be considered.

Note: Authors may find it convenient that Springer’s proceedings LaTeX templates are available in Overleaf.

The initial submission must be in PDF format.

Please note that in the camera-ready phase, authors of accepted papers will need to submit both a compiled PDF and all source files (including LaTeX files and figures).

The camera-ready phase will have more detailed formatting requirements than the initial submission phase. Authors are invited to consult these camera-ready instructions in advance.

Submitted papers will be peer-reviewed on the basis of technical quality, relevance, significance, and clarity. If a submission is not accepted as a full-length paper, it may still be accepted either as a short paper or as an extended abstract. In such cases, authors will be asked to reduce the length of the submission accordingly. Authors of all accepted papers will be asked to revise their papers based on the reviewers’ comments.

Accepted papers are to be revised and submitted as a camera-ready version. Reviewers’ comments should be taken into account and should guide appropriate revisions. The camera-ready submission must include the compiled PDF and all source files needed for compilation, including the LaTeX file, reference file, and figures.

By submitting a camera-ready paper, the author(s) agree that at least one author will attend the conference and give a presentation of the paper. At least one author must be registered by the deadline for camera-ready submissions.

Camera-ready submissions that do not comply with all given requirements might have to be excluded from the conference proceedings.

After formatting the paper as explained below, please follow the subsequent instructions for submitting a zip/tar file that contains your camera-ready paper sources:

Deadline for submission: June 29, 2022

Papers must be prepared in the LNCS Springer LaTeX style, using the default font and font size. Authors should consult Springer’s authors’ guidelines and use their proceedings template for LaTeX, for the preparation of their papers. Please download the LNCS Springer LaTeX template package (zip, 302 kB) and authors' guidelines (pdf, 192 kB) directly from the Springer website. The LaTeX class and references style (llncs.cls and splncs04.bst) included in this package should not be modified. Please also download and consult the ANTS 2022 sample LaTeX document (zip, 129 kB), which shows the correct options to use within the Springer template.

Your submission should be uploaded as a compressed archive (zip, tgz), containing the final camera-ready versions of the following:

Figures should be in their original vector format (pdf, eps), if applicable. Otherwise, figures provided in raster format (png, jpeg, tiff, bmp) must be high-resolution: at least 800 dpi at the final size if the figure includes line work, and at least 300 dpi at the final size otherwise.
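As a rough worked example, assume a raster figure that spans the full LNCS text width. In the standard llncs class this width is 12.2 cm, but treat that value as an assumption and measure your own figure at its final printed size. The minimum pixel width follows from converting millimetres to inches (25.4 mm per inch) and multiplying by the required resolution:

```latex
\[
  w_{\mathrm{px}} = \frac{w_{\mathrm{mm}}}{25.4} \times \mathrm{dpi},
  \qquad
  \frac{122}{25.4} \times 800 \approx 3843~\mathrm{px},
  \qquad
  \frac{122}{25.4} \times 300 \approx 1441~\mathrm{px}.
\]
```

Under this assumption, a full-width figure containing line work needs roughly 3843 pixels across, and any other full-width raster figure needs roughly 1441 pixels. A figure set at half the text width needs about half as many.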

Although figures in the digital proceedings will be in full color, the printed proceedings of ANTS 2022 will be in grayscale. Authors should therefore ensure that their figures remain legible when printed in grayscale.

Springer encourages authors to include their ORCIDs in their papers. In addition, the corresponding author of each paper, acting on behalf of all of the authors of that paper, must complete and sign a Consent-to-Publish form. The corresponding author signing the copyright form should match the corresponding author marked on the paper. Once the files have been sent to Springer, changes relating to the authorship of the papers cannot be made.

Full-length Papers are strictly limited to 11 pages + references, and Short Papers are strictly limited to 7 pages + references.

These page limits include figures, tables, and all supplementary sections (e.g., Acknowledgements). The only exclusion from these page limits is the reference list, which should have an appropriate length with respect to the state of the art.

Extended Abstracts are strictly limited to 2 pages (including references).

All page limits refer to papers prepared in the LNCS Springer LaTeX template, according to the instructions provided here. Do not modify the template defaults, such as those for margins, line spacing, or font size.

Submissions must be prepared in LaTeX, and the source files (tex, bib, bbl) must be provided. Authors should use the LaTeX class and references style files (llncs.cls and splncs04.bst) as provided in the LNCS Springer LaTeX template package. These files should not be modified. Also, do not add formatting modifications to the main document (tex) to override the template defaults. Do not use, for instance, any line spacing modifications (e.g., \vspace{} or \\*[0pt]), or font size modifications (e.g., \fontsize{}). Please do not add any special fonts. Please do not add packages or custom commands that change the formatting (e.g., do not use the package subcaption, as it overrides the default caption formatting in the template).

During the final preparation of the proceedings, any formatting modifications in the main document (tex) will be removed if they do not match the template, potentially causing a change in paper length. Springer will also recompile all papers using their original llncs class file (llncs.cls). If authors make any modifications to the llncs file, their paper will not compile correctly in the final step, and cannot be included in the proceedings.

References must be formatted using the provided references style file (splncs04.bst). In this references style, in-text citations will appear as numbers, and the numbered reference list will be ordered alphabetically.
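The sketch below shows how the reference style is typically connected to the main file. The bibliography file name `references.bib` and the citation key `smith2020example` are hypothetical placeholders:

```latex
% In the body: \cite produces a numbered citation such as [1].
This builds on earlier work~\cite{smith2020example}.

% At the end of the main document, just before \end{document}:
\bibliographystyle{splncs04} % unmodified style file from the Springer package
\bibliography{references}    % hypothetical file name: references.bib
```

The camera-ready sources must include the bbl file. Run BibTeX again after any change to the references so that this generated file is up to date.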

For further information, please refer to the class documentation included in the LNCS Springer LaTeX package, and to the LNCS Springer authors’ guidelines.

It is mandatory that submissions to ANTS 2022 follow certain options within the LNCS Springer template. The ANTS 2022 sample LaTeX document (zip) shows the correct template options to use. These mandatory template options are as follows.

Running header:

Use \documentclass[runningheads]{llncs}.

In \authorrunning{}, give the initial of the first name(s) and the full surname. Always give the first author's name. If there are precisely two authors, give both the first and second authors' names. If there are more than two authors, use ‘et al.’ after the name of the first author.

Use \titlerunning{Abbreviated paper title}.

Title and headings:

\newline command with the title.

Author names and affiliations:

In the \author{} field, provide the full first name (not only the initial).

Give each author's ORCID using \orcidID{} within the LaTeX field \author{}.

In the \institute{} and \email{} fields, give the department, faculty, university, company (if applicable), city, country, and email address. Do not include the street address or ZIP code (it is not a postal address). The email address of the corresponding author must be included in the \institute{} and \email{} fields.

\institute{} entries, include an \index{} entry for each author, giving the full surname, followed by the full first name(s).

For further details, please refer to the documentation of the llncs class.
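The options above can be combined into a front-matter sketch like the following. All names, ORCIDs, affiliations, and email addresses are hypothetical placeholders. The ANTS 2022 sample LaTeX document remains the authoritative reference, in particular for where the \index{} entries go, which this sketch does not show:

```latex
\documentclass[runningheads]{llncs}

\begin{document}

\title{A Hypothetical Paper Title}
% Abbreviated title for the running head
\titlerunning{Hypothetical Short Title}

% Full first names; ORCIDs given inside \author{}
\author{Alice Example\inst{1}\orcidID{0000-0000-0000-0000} \and
  Bob Example\inst{2}\orcidID{0000-0000-0000-0001}}
% Two authors: both names; three or more: "A. Example et al."
\authorrunning{A. Example and B. Example}

% Department, faculty, university or company, city, country, email
% (no street address or ZIP code)
\institute{Department of Computer Science, Faculty of Engineering,
  Example University, Example City, Country\\
  \email{alice@example.org}
  \and
  Example Company, Example City, Country\\
  \email{bob@example.org}}

\maketitle

\begin{abstract}
Abstract text.
\keywords{First keyword \and Second keyword}
\end{abstract}

\section{Introduction}
Body text.

\end{document}
```

Note that the sketch adds no packages and leaves all template defaults untouched, in line with the formatting rules above.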

Acknowledgements:

Keywords:

For contributions accepted as Extended Abstracts:

Conference proceedings are published by Springer in the LNCS series, Volume 13491.

The journal Swarm Intelligence will publish a special issue dedicated to ANTS 2022 that will contain extended versions of the best research works presented at the conference.